High-quality image generation has become widely accessible and continues to evolve rapidly. As a result, anyone can generate images that are indistinguishable from real ones. This enables a wide range of applications, including malicious use with deceptive intent. Despite advances in detection techniques for generated images, a robust detection method still eludes us. Furthermore, model personalization techniques might affect the detection capabilities of existing methods. In this work, we utilize the architectural properties of convolutional neural networks (CNNs) to develop a new detection method. Our method can detect images from a known generative model and enables us to establish relationships between fine-tuned generative models. We tested the method on images produced by both Generative Adversarial Networks (GANs) and recent large text-to-image models (LTIMs) that rely on diffusion models. Our approach outperforms other methods trained under identical conditions and achieves performance comparable to state-of-the-art pre-trained detection methods on images generated by Stable Diffusion and MidJourney, while requiring significantly fewer training samples.

Convolutional Neural Networks (CNNs) tend to imprint unique features, known as “fingerprints”, on the images they produce. We use this phenomenon to train an additional CNN to mimic the fingerprint of a target image generator. We call this method “Deep Image Fingerprint” (DIF).
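
To make the notion of a generator fingerprint concrete, here is a minimal sketch that does *not* use DIF's trained CNN. It swaps in the classic, much simpler averaging approach: compute a high-pass noise residual of each generated image, average the residuals so that image content cancels out and the shared artifact remains, then score a test image by the normalized correlation between its residual and that estimate. The 3×3 box-blur "denoiser", the function names (`residual`, `estimate_fingerprint`, `correlation`), and the synthetic data are all assumptions made for illustration.

```python
import numpy as np

def residual(img: np.ndarray) -> np.ndarray:
    """High-pass noise residual: the image minus a 3x3 box-blurred copy (assumed denoiser)."""
    padded = np.pad(img, 1, mode="reflect")
    h, w = img.shape
    blurred = sum(padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)) / 9.0
    return img - blurred

def estimate_fingerprint(images: list) -> np.ndarray:
    """Average many residuals: image content averages out, the shared artifact remains."""
    return np.mean([residual(im) for im in images], axis=0)

def correlation(a: np.ndarray, b: np.ndarray) -> float:
    """Normalized cross-correlation between two arrays of the same shape."""
    a, b = a - a.mean(), b - b.mean()
    return float((a * b).sum() / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12))

# Synthetic data: every "generated" image carries the same hypothetical artifact pattern.
rng = np.random.default_rng(0)
pattern = 0.5 * rng.standard_normal((64, 64))
fake = [rng.standard_normal((64, 64)) + pattern for _ in range(201)]
real = rng.standard_normal((64, 64))

fingerprint = estimate_fingerprint(fake[:200])             # "train" on 200 generated images
score_fake = correlation(residual(fake[200]), fingerprint)  # held-out generated image
score_real = correlation(residual(real), fingerprint)       # image without the artifact
print(f"generated: {score_fake:.2f}  real: {score_real:.2f}")
```

A detector built this way would flag an image when its score exceeds a threshold chosen on validation data (again an assumption of this sketch). DIF's premise is that a CNN trained for the task captures the fingerprint better than this plain average, especially when only a few training samples are available.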

DIF has two main applications. First, DIF achieves high accuracy in detecting synthetic images. Second, we show that fine-tuned image generators preserve the fingerprint of their source model, so DIF can be used to trace the origin of fine-tuned models.

Although CNNs are highly capable image generators, they fail to accurately reproduce images that carry no semantic information (Y); we denote the network's reproduction by Ŷ. Here we show the convergence of a popular U-Net model on this task. Although Ŷ and Y look similar in image space, the model leaves its fingerprint, which is clearly visible in Fourier space (FFT{Ŷ}).
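
The Fourier-space view used above can be reproduced for any grayscale image by taking the centered log-magnitude spectrum. The minimal NumPy sketch below assumes a single-channel float image; the function name `log_spectrum` and the synthetic example (smooth content plus a faint checkerboard standing in for a generator artifact) are hypothetical. A pattern that is barely visible in image space appears as a sharp, isolated peak in the spectrum.

```python
import numpy as np

def log_spectrum(img: np.ndarray) -> np.ndarray:
    """Centered log-magnitude spectrum of a 2-D grayscale image."""
    centered = img - img.mean()                   # drop the DC term so it does not dominate
    spectrum = np.fft.fftshift(np.fft.fft2(centered))
    return np.log1p(np.abs(spectrum))

# Synthetic example: smooth low-frequency content plus a faint period-2 checkerboard.
h = w = 64
yy, xx = np.mgrid[0:h, 0:w]
content = np.sin(2 * np.pi * xx / w)             # stands in for "semantic" content
artifact = 0.05 * (-1.0) ** (xx + yy)            # hypothetical generator artifact
spec = log_spectrum(content + artifact)

# After fftshift, index (0, 0) holds the highest (Nyquist) frequency in both axes,
# which is exactly where the checkerboard's energy lands.
print(f"Nyquist bin: {spec[0, 0]:.2f}  empty bin: {spec[10, 10]:.2f}")
```

In practice, spectra are often averaged over many outputs (or residuals) of the same model, so that content-dependent energy averages out and the model-specific peaks stand out.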

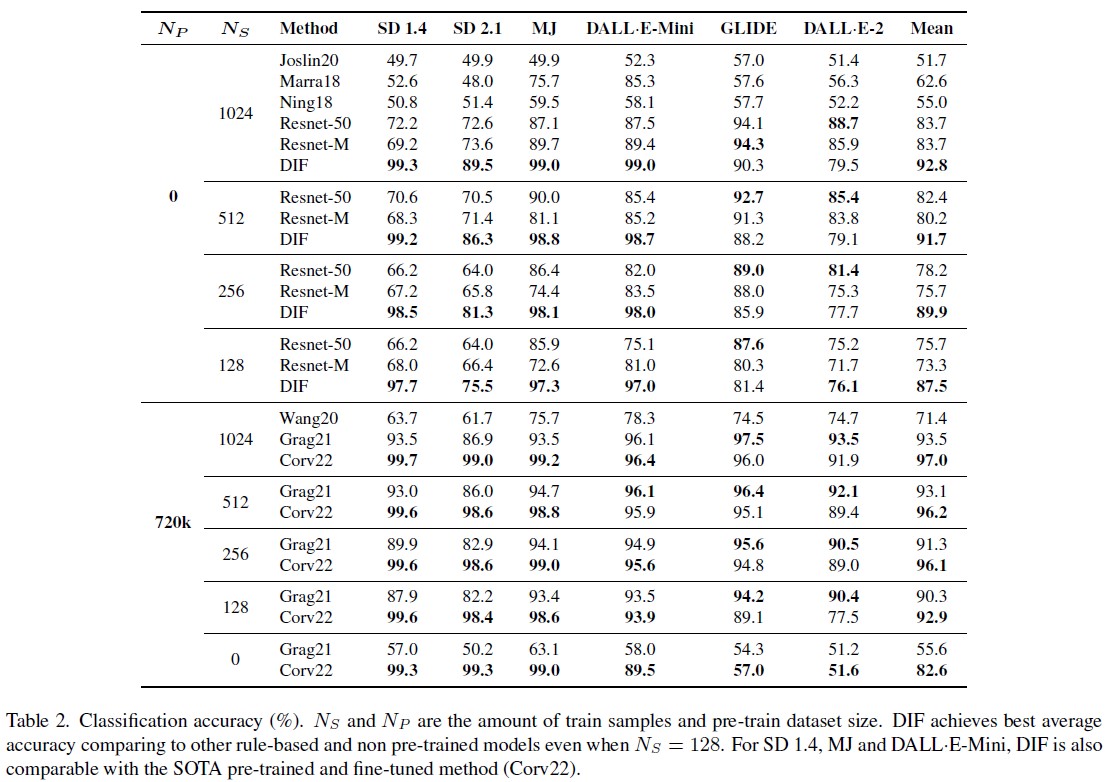

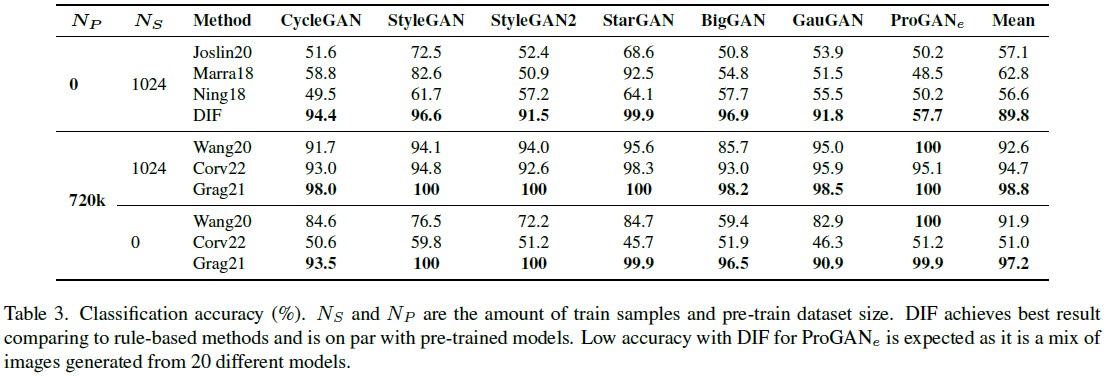

On average, DIF outperforms other methods when all of them are trained under similar conditions. In some cases, it is on par with pre-trained and fine-tuned state-of-the-art (SOTA) methods, while requiring a significantly smaller number of training samples. Below we show detection results for images generated by both LTIMs and GANs.

We show that image generators retain their unique fingerprints even after being fine-tuned on new data. Following this discovery, we observed that an early version of MidJourney was based on a pre-trained Stable Diffusion v1.x model. Below is an example of cross-detection results for fine-tuned Stable Diffusion models (left) and for LTIMs (right). Observe that MidJourney behaves similarly to the fine-tuned models of Stable Diffusion v1.4.
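
A cross-detection table of this kind can be assembled by scoring test images from every model against every model's fingerprint. The toy sketch below again uses the simple residual-averaging fingerprint rather than DIF's trained CNN, and it models "fine-tuning" as changing image content while inheriting the source model's artifact pattern with a small perturbation. All model names (`source_a`, `source_b`, `finetuned_a`) and data are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
SHAPE = (64, 64)

def residual(img):
    """High-pass residual via a 3x3 box blur (assumed denoiser)."""
    padded = np.pad(img, 1, mode="reflect")
    h, w = img.shape
    blurred = sum(padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)) / 9.0
    return img - blurred

def correlation(a, b):
    """Normalized cross-correlation between two arrays of the same shape."""
    a, b = a - a.mean(), b - b.mean()
    return float((a * b).sum() / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12))

# Hypothetical artifact patterns: the fine-tuned model inherits source_a's pattern.
source_a = 0.5 * rng.standard_normal(SHAPE)
source_b = 0.5 * rng.standard_normal(SHAPE)
patterns = {
    "source_a": source_a,
    "source_b": source_b,
    "finetuned_a": source_a + 0.1 * rng.standard_normal(SHAPE),
}

def sample(model, n):
    """Draw n images from a toy model: random content plus the model's artifact."""
    return [rng.standard_normal(SHAPE) + patterns[model] for _ in range(n)]

# One fingerprint per model: the mean residual of its training images.
names = list(patterns)
fingerprints = {m: np.mean([residual(x) for x in sample(m, 100)], axis=0) for m in names}

# Rows: model that generated the test images; columns: fingerprint used for scoring.
matrix = np.zeros((len(names), len(names)))
for i, img_model in enumerate(names):
    test_images = sample(img_model, 20)
    for j, fp_model in enumerate(names):
        matrix[i, j] = np.mean([correlation(residual(x), fingerprints[fp_model])
                                for x in test_images])

# Trace the fine-tuned model to the source whose fingerprint matches it best.
sources = ["source_a", "source_b"]
row = matrix[names.index("finetuned_a")]
origin = max(sources, key=lambda s: row[names.index(s)])
print(names)
print(np.round(matrix, 2))
print("traced origin of finetuned_a:", origin)
```

High off-diagonal scores between two models suggest a shared fingerprint; in this toy setting, that is how `finetuned_a` is linked to `source_a` rather than `source_b`.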

If you find this research useful, please cite the following:

@misc{sinitsa2023deep,
  title={Deep Image Fingerprint: Accurate And Low Budget Synthetic Image Detector},
  author={Sergey Sinitsa and Ohad Fried},
  year={2023},
  eprint={2303.10762},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}